Engineering Challenges

The following is an in-depth discussion of four interesting engineering challenges we faced while building Otter.

WebRTC and Glare

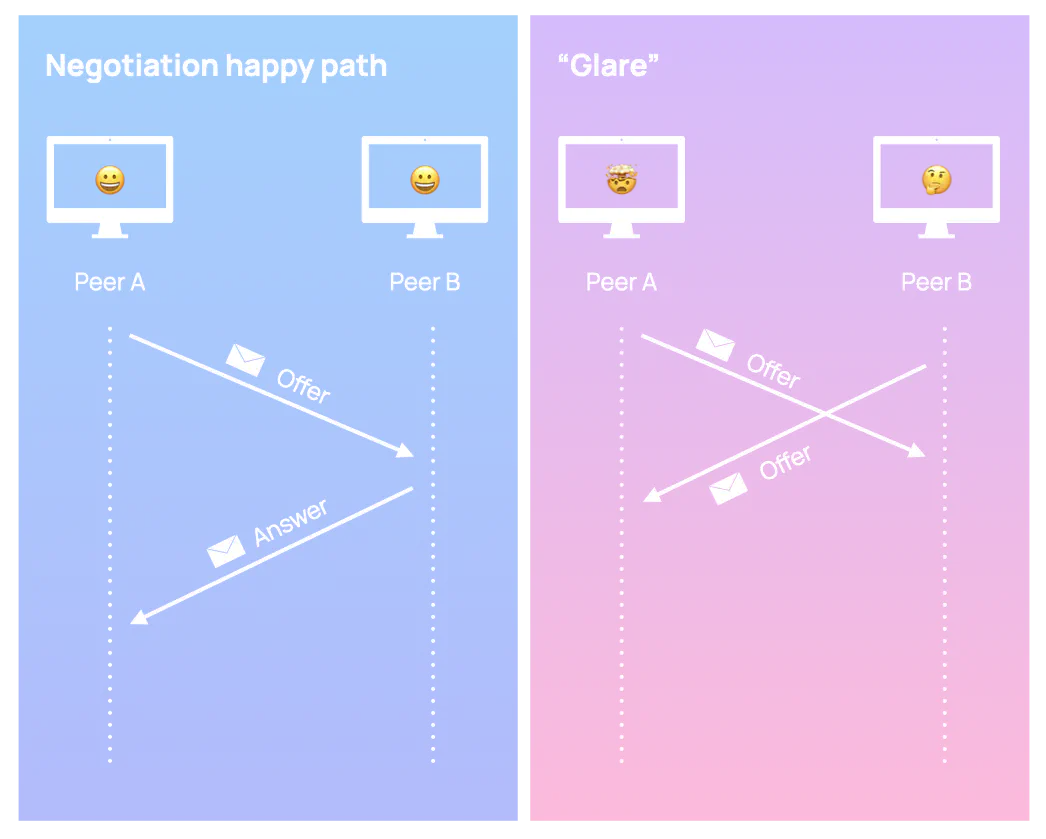

One challenge we faced working with the WebRTC API involved asynchronicity and race conditions. Ideally, the offer-answer process happens in an orderly fashion: one peer sends an offer, the other peer receives it and sends back an answer (if the other peer wishes to establish a P2P connection).

In practice, however, both peers can end up firing offers at each other in a haphazard fashion. The offer-answer exchange is called negotiation, and it happens both during the initial setup of the WebRTC session and any time a change to the communication environment requires reconfiguring the session.

Such a change could happen when adding or removing media from a live WebRTC connection. Recall that the Session Description object contains information about media types and codecs, so adding or removing media changes a peer’s Session Description. Thus, a new offer needs to be generated and sent to the remote peer.

However, what happens if peers trigger such a change simultaneously? When both peers send offers to each other at the same time, the colliding offers disrupt their signaling state machines. This situation is known as “glare”.

The default way of negotiating the offer-answer process in WebRTC introduces race conditions that cannot be resolved on their own, resulting in deadlock and errors. User intervention is required, which naturally translates into a poor user experience.

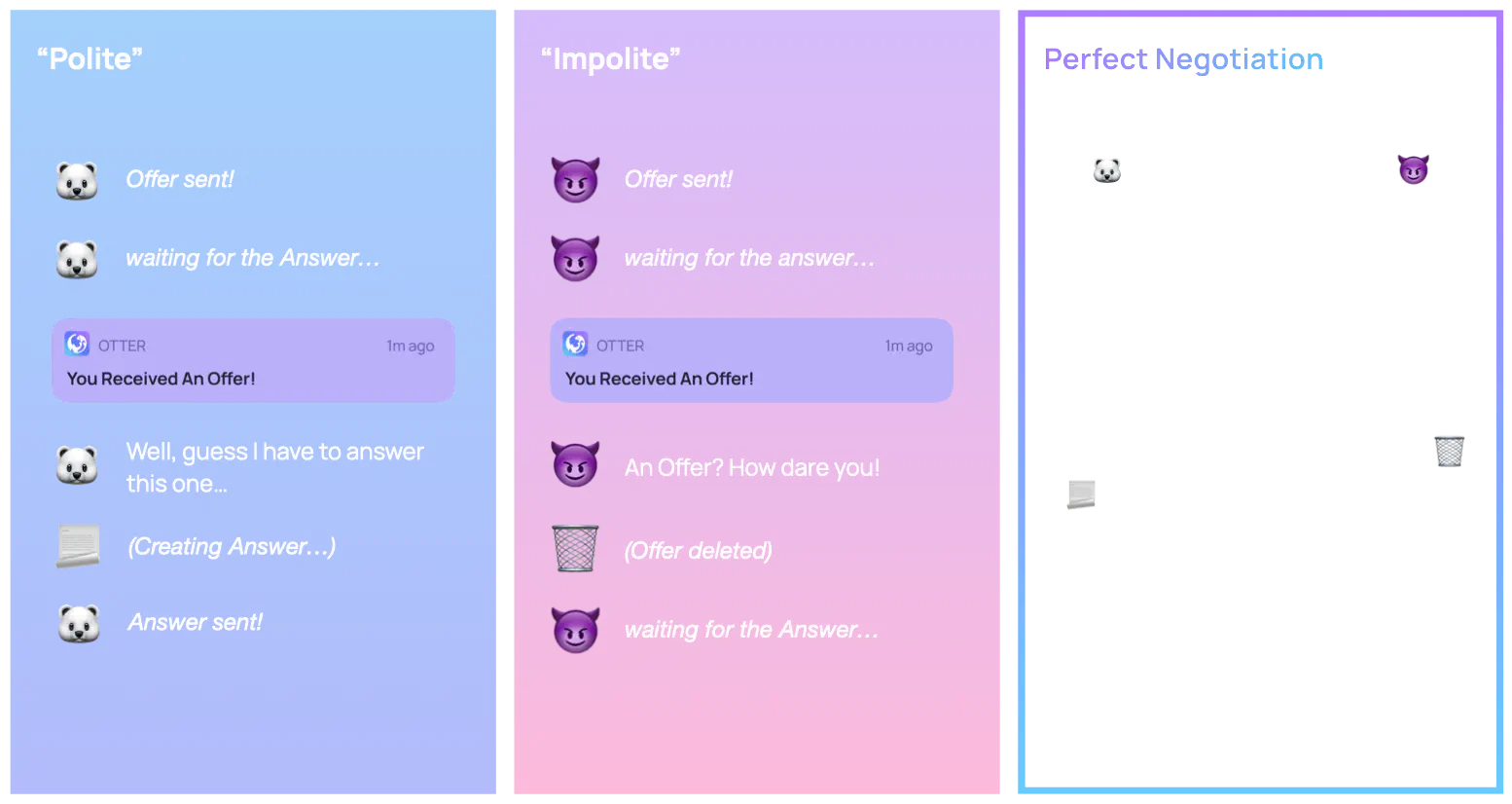

Instead, we need a way to handle glare in a resilient and programmatic manner. We need to isolate the negotiation process from the rest of the application. Enter Perfect Negotiation: a “recommended pattern to manage negotiation transparently, abstracting this asymmetric task away from the rest of the application”. Perfect Negotiation works by assigning a role to each peer, where a peer’s role specifies how it behaves to resolve any signaling collision.

The two assigned roles are polite and impolite. A polite peer rescinds their offer in the face of an incoming offer, whereas an impolite peer ignores an incoming offer if it would collide with their own.

To implement Perfect Negotiation, we needed to add a couple of state variables to our peers: one to keep track of whether we are in the middle of an operation (recall that the offer-answer workflow is asynchronous), and another to indicate whether the peer is polite or impolite.
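The collision rule can be sketched as a pure function, which keeps it runnable outside a browser. This is a minimal illustration under stated assumptions, not Otter's actual code: the names `NegotiationState`, `makingOffer`, and `onIncomingOffer` are hypothetical, and in a browser the signaling state would be read from `RTCPeerConnection.signalingState`.

```typescript
// Illustrative model of the two state variables; all names here are assumptions.
type SignalingState = "stable" | "have-local-offer" | "have-remote-offer";

interface NegotiationState {
  polite: boolean;      // role assigned to this peer
  makingOffer: boolean; // true while our own offer is being created and sent
  signalingState: SignalingState;
}

type OfferDecision = "accept" | "rollback-then-accept" | "ignore";

// Decide what to do when an offer arrives from the remote peer.
function onIncomingOffer(state: NegotiationState): OfferDecision {
  // A collision exists if we are mid-offer or not in the stable state.
  const collision = state.makingOffer || state.signalingState !== "stable";
  if (!collision) return "accept";
  // Impolite peers ignore the colliding offer; polite peers rescind their own.
  return state.polite ? "rollback-then-accept" : "ignore";
}
```

Because exactly one peer is polite, a collision always resolves the same way: the impolite peer's offer wins and the polite peer answers it.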

With Perfect Negotiation, we have resolved the race conditions that previously resulted in deadlock and poor user experience. Clients are able to add and remove media streams at will during a WebRTC session.

Signaling & DynamoDB Optimization

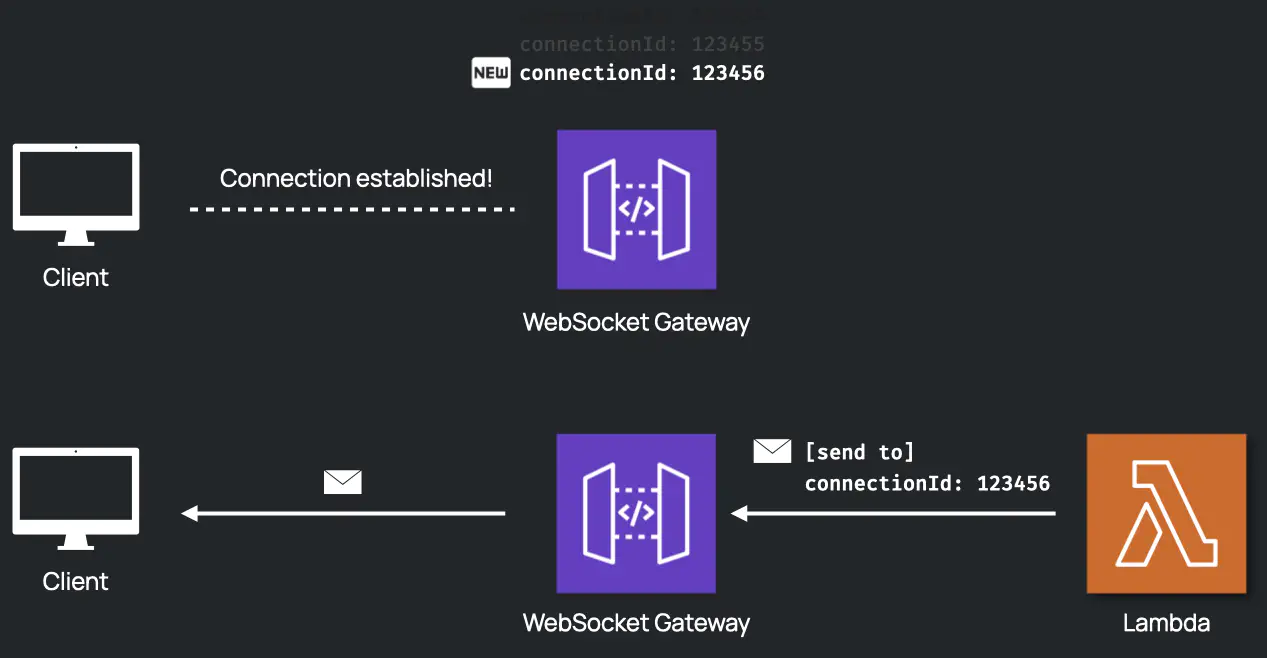

Another challenge we faced was optimizing how signaling Lambdas interacted with DynamoDB. Let’s first understand how signaling Lambdas work with the WebSocket Gateway to send messages to a specific peer.

When a client establishes a connection with the WebSocket Gateway, the gateway creates a connectionId to uniquely identify this connection. Whenever the gateway receives a message from the client, this connectionId is passed to the Lambda as a property of the event’s requestContext object. To send a message to a client, the Lambda only needs to know the connectionId of the client and make an API call to the gateway.

Recall that Otter has the concept of a “room” representing the virtual space where a session occurs. Every room has a roomId property to uniquely identify it. Otter stores the connectionId in the database with the roomId to keep track of the room a peer resides in. With this simple schema in place, the signaling Lambda can now query the database with the roomId, and pass the message if another peer is present. Initially, the message sent to the signaling Lambda had the following structure:

```javascript
{
  roomId: "rm_42Y87ah8w", // used to find out if peer is in the same room
  payload: {}
}
```

Since the signaling Lambda has access to the sender’s connectionId, it can look up the roomId in the database and determine the other peer’s connectionId in order to pass the message to that peer. This approach keeps sending and handling messages simple, but it also means the signaling Lambda queries DynamoDB for every message it receives.

Even without cold starts, a signaling Lambda could take as long as ~1000 ms when querying the database, which might be acceptable if we only dealt with a few occasional messages. However, under bursty traffic patterns, such slow performance could easily rack up compute time. Even worse, each connecting peer sends multiple candidate addresses asynchronously to the signaling Lambda, and each address triggers the Lambda to query the database for the same information.

To tackle this redundancy, the signaling Lambda should not have to determine the destination connectionId. Instead, we reasoned that both the “source” and “destination” connectionIds should be included in every message sent to the signaling Lambda. With the “destination” property, the signaling Lambda no longer needs to query the database to know where to send the message:

- If the “source” property is null, the signaling Lambda can obtain the connectionId from the WebSocket Gateway and fill in the blank.

- If the “destination” property is null, the signaling Lambda can query the database (only once) and fill in the blank.

- When the client receives a message from the other peer, it stores (or updates) both connectionIds locally and includes them when sending all subsequent messages.

Now the messages have the following structure:

```javascript
{
  roomId: "rm_42Y87ah8w", // in case destination is unknown, use this to find it out
  source: "Fj294OwKs026928JK", // the connectionId for the peer that sends this message
  destination: "KJS992hsdGHs091as", // the connectionId to send this message to
  polite: true, // used for perfect negotiation
  payload: {}
}
```

When establishing the initial WebRTC connection, since the client doesn’t know any of the connectionIds, the source and destination properties will be null. As we mentioned earlier, these two properties will be filled by the signaling Lambda. This information is stored locally and used in all subsequent messages.
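The fill-in logic for the two routing properties can be sketched as follows. This is an illustration under stated assumptions rather than the actual Lambda handler: `SignalMessage`, `PeerLookup`, and `resolveRoute` are hypothetical names, and `lookupPeer` stands in for the DynamoDB query.

```typescript
// Hypothetical message shape mirroring the structure shown above.
interface SignalMessage {
  roomId: string;
  source: string | null;
  destination: string | null;
  polite: boolean;
  payload: unknown;
}

// Finds the other peer's connectionId in a room; stands in for the DynamoDB query.
type PeerLookup = (roomId: string, senderId: string) => Promise<string | null>;

// Fill in missing routing fields, querying the database only when necessary.
async function resolveRoute(
  msg: SignalMessage,
  senderConnectionId: string, // supplied by the WebSocket Gateway
  lookupPeer: PeerLookup,
): Promise<SignalMessage> {
  // A null source is filled in from the gateway, with no database access.
  const source = msg.source ?? senderConnectionId;
  // The lookup runs only when the destination is still unknown.
  const destination = msg.destination ?? (await lookupPeer(msg.roomId, source));
  return { ...msg, source, destination };
}
```

Once a client echoes back the filled-in values, every later message skips the lookup entirely.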

In a typical P2P session with no reconnection, we reduced the number of database queries from ~20 to just one. This approach reduced the latency caused by Lambda execution from ~1000 ms to ~200 ms.

Static Site Generation & Authentication

Another challenge we encountered was how to build and deploy the Otter Web App. In order to join a video call, the Otter Web App first has to send two requests to the Otter API: one to verify the call is valid and one to fetch the CoTURN credentials. Next, the Otter Web App must connect to the WebSocket Gateway to initiate signaling, and potentially contact the STUN/TURN infrastructure.

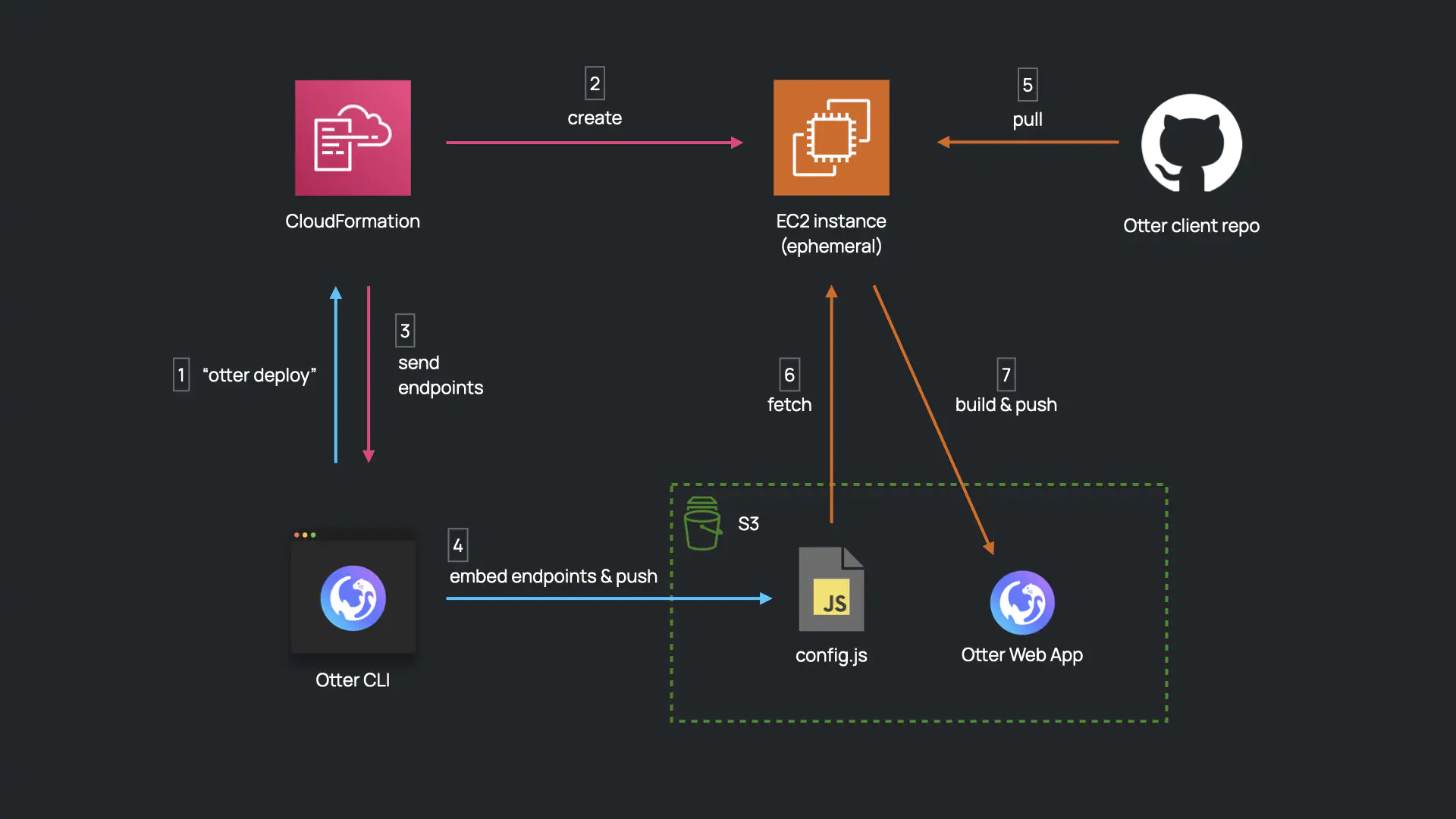

To interact with this infrastructure, the Otter Web App has to know the infrastructure’s endpoints, which means the Otter Web App cannot be built until said infrastructure has been provisioned. Thus, the Otter Web App could not be built in advance, and had to be built during otter deploy.

To make this happen, otter deploy spins up an EC2 instance and uploads the endpoints for the infrastructure to an S3 bucket. The EC2 instance pulls the code for the Otter Web App from GitHub and retrieves the endpoints from the S3 bucket. The EC2 instance then builds the Otter Web App using these endpoints and publishes it to another S3 bucket, which is associated with CloudFront. Finally, the S3 bucket holding the endpoints is deleted, the EC2 instance is terminated, and the website is served through CloudFront.

However, this solution introduced another problem: the endpoints for the video calling infrastructure are exposed in the JavaScript that is served to the end user. This highlighted the need to implement authentication within the infrastructure.

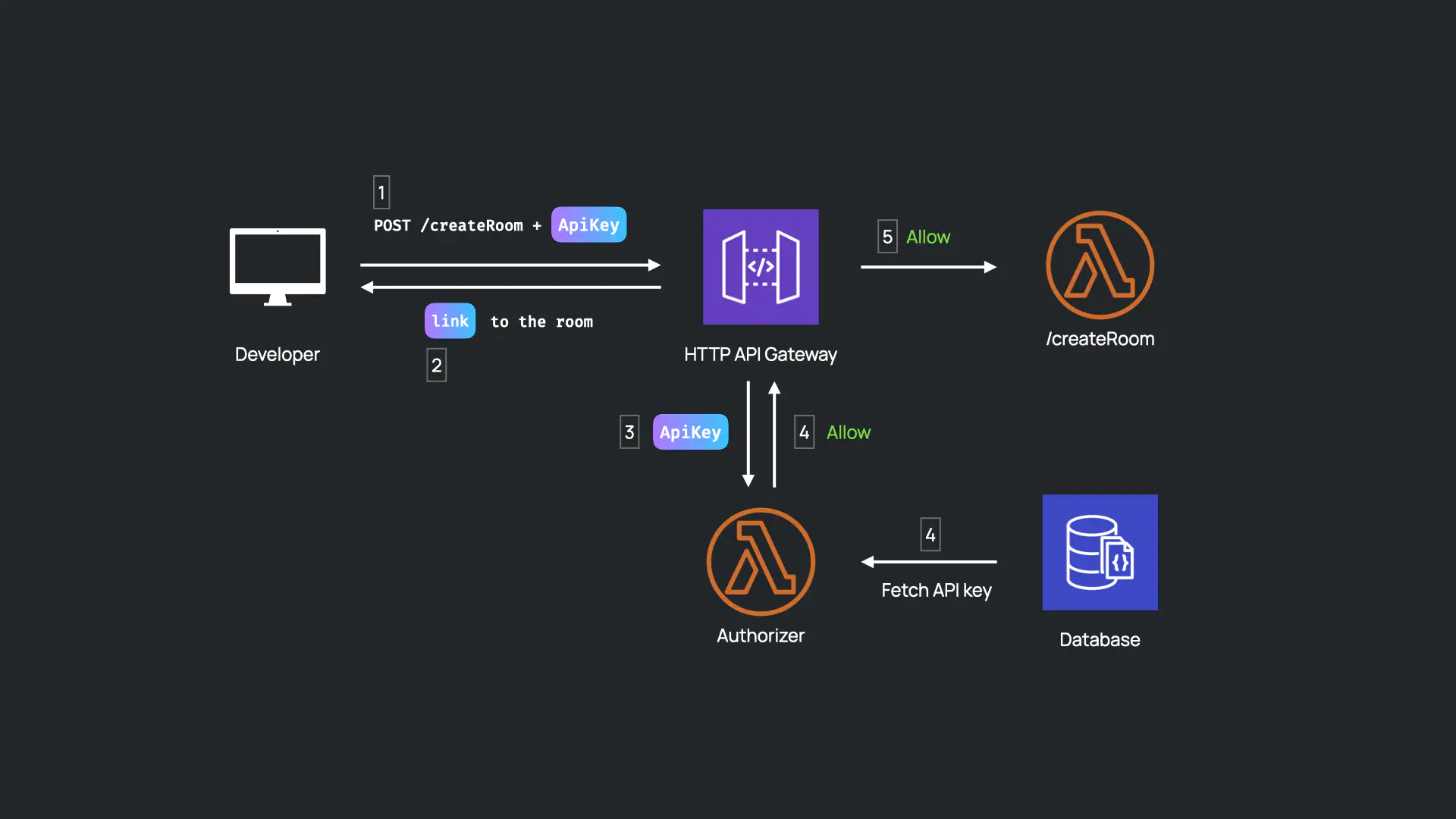

To secure our infrastructure, we decided to require the use of an API key. During otter deploy, an API key is generated, stored in a new table in DynamoDB, and displayed in the CLI. An authorizer Lambda is attached to both the HTTP API Gateway and the WebSocket Gateway; this Lambda checks that the API key in an incoming request matches an API key stored in the database, and allows or denies traffic accordingly.
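As a rough sketch of the allow-or-deny decision, an API Gateway Lambda authorizer can respond with an IAM policy that permits or refuses `execute-api:Invoke`. The function below is a simplified illustration, not Otter's authorizer: `authorize`, the `storedKeys` set (standing in for the DynamoDB table), and the `principalId` value are all assumptions.

```typescript
// Shape of an IAM policy response returned by an API Gateway Lambda authorizer.
interface AuthorizerResponse {
  principalId: string;
  policyDocument: {
    Version: "2012-10-17";
    Statement: { Action: "execute-api:Invoke"; Effect: "Allow" | "Deny"; Resource: string }[];
  };
}

// Build an Allow or Deny policy depending on whether the presented key is known.
// `storedKeys` stands in for the DynamoDB lookup (an assumption for this sketch).
function authorize(
  apiKey: string | undefined,
  storedKeys: Set<string>,
  resource: string, // ARN of the route being invoked
): AuthorizerResponse {
  const allowed = apiKey !== undefined && storedKeys.has(apiKey);
  return {
    principalId: "otter-client", // hypothetical identifier
    policyDocument: {
      Version: "2012-10-17",
      Statement: [
        { Action: "execute-api:Invoke", Effect: allowed ? "Allow" : "Deny", Resource: resource },
      ],
    },
  };
}
```

A request with a missing key is treated the same as one with an unknown key: both receive a Deny policy.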

Given that Otter is a drop-in service for an application, we made an assumption that the developer using Otter will already have their own backend infrastructure. To create a video call, a developer would include this API key when making a request from their backend to the Otter API’s “createRoom” route.

However, another problem surfaces concerning the Otter Web App. When a user clicks on the link to join a video call, the Otter Web App needs to send multiple requests to the Otter API, and these requests must include an API key. Yet the Otter Web App is exposed to the end user. How can the Otter Web App be authenticated without exposing the API key to the end user?

To address this, we decided to implement JSON Web Tokens. A JSON Web Token (JWT) is a signed token used to securely transmit information between parties; in our case the token is generated from the API key, so the key itself never has to be sent to the browser.

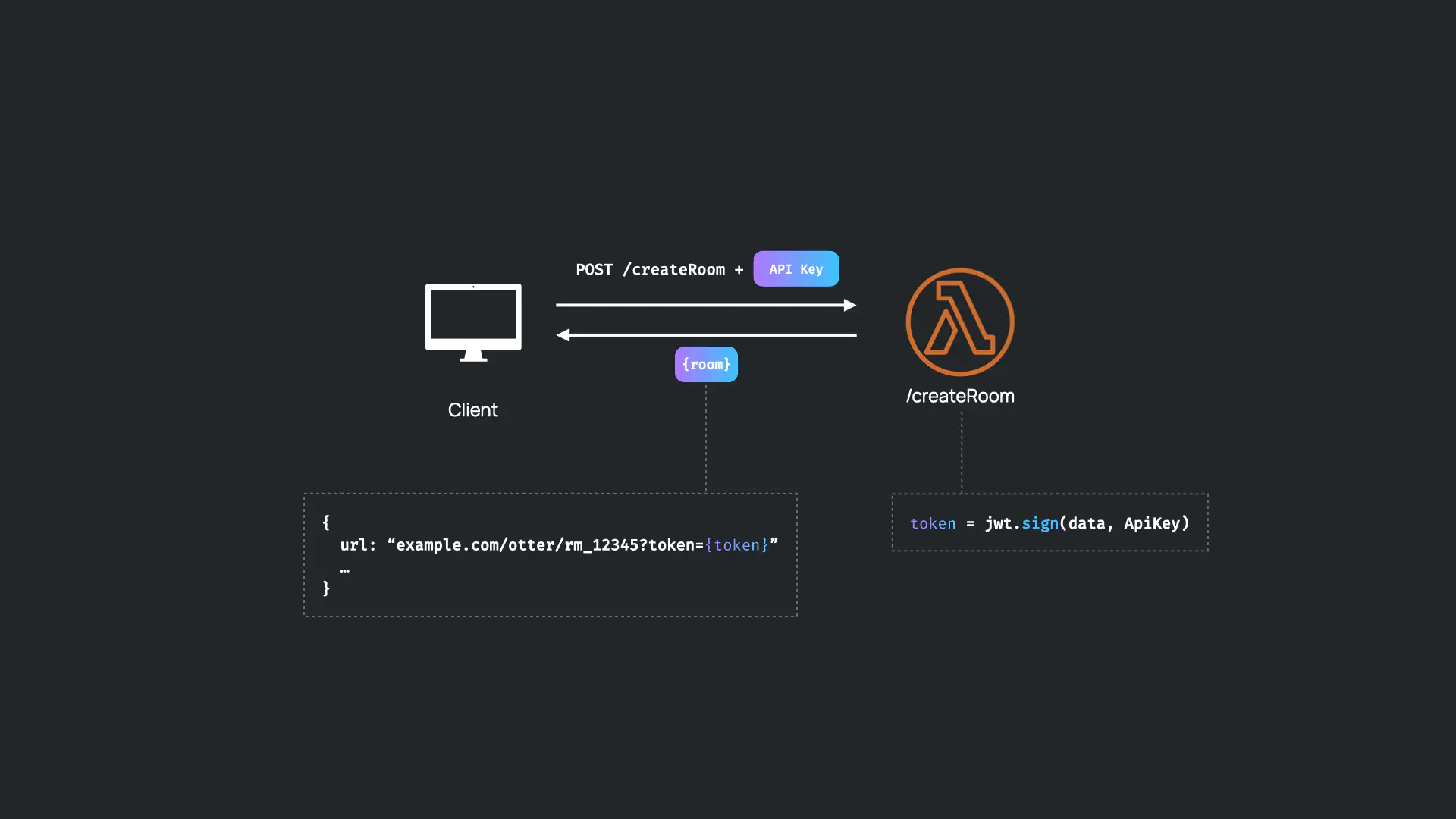

When a request is sent to the Otter API’s “createRoom” route, the “createRoom” Lambda generates a JWT using the API key in the database and responds with a link to join the video call with the JWT in the query parameters.

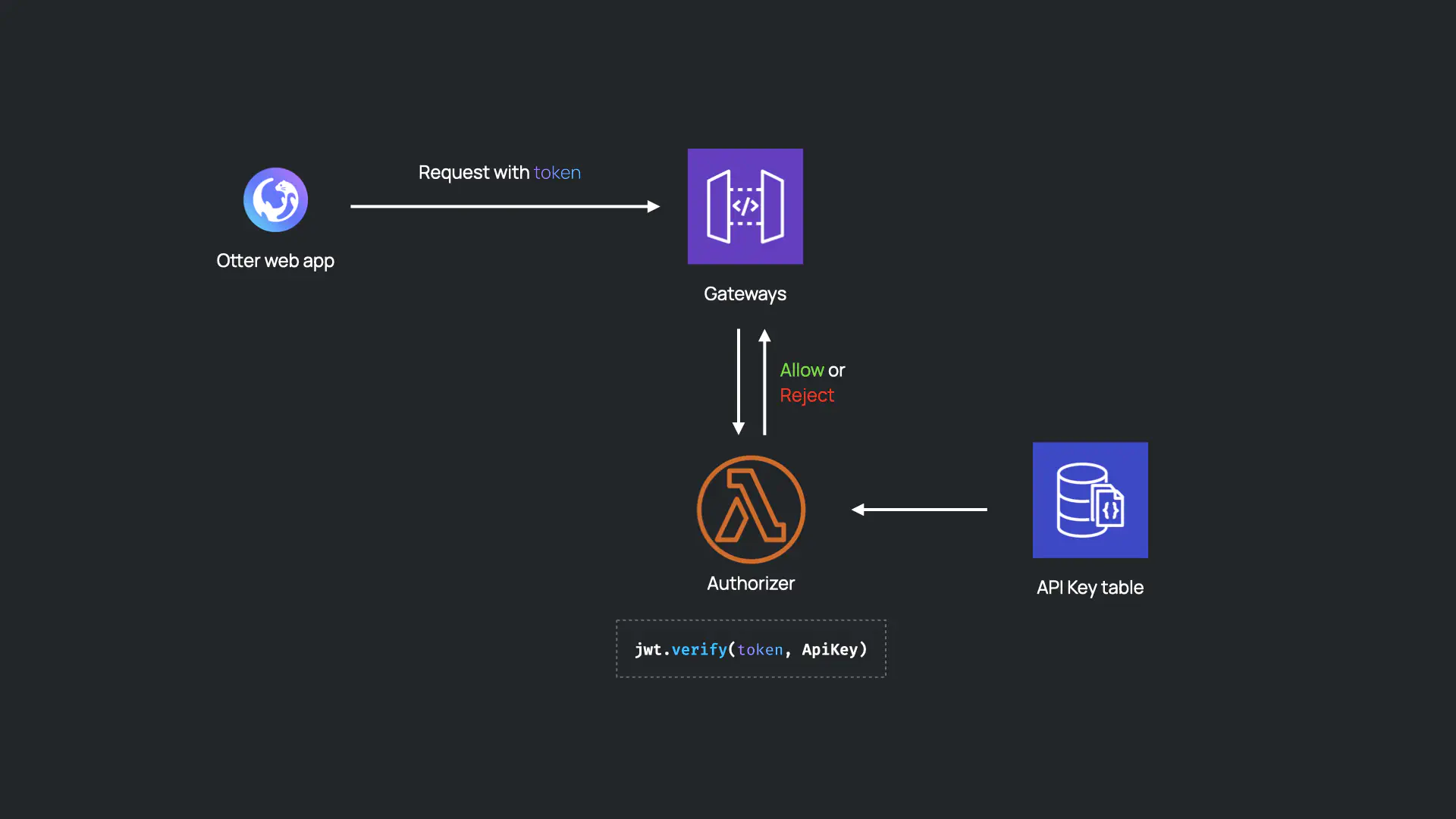

When a user clicks on the link, the Otter Web App fetches the JWT from the query parameters of the URL and includes it in requests to the Otter infrastructure. The authorizer Lambda examines the JWT from the incoming request and verifies it using the API key stored in the database.
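One way to read "generates a JWT using the API key" is that the API key serves as the HMAC secret for an HS256-signed token. The sketch below illustrates that reading with Node's built-in `crypto` module. It is an assumption about the scheme, not Otter's confirmed implementation: `issueToken`, `verifyToken`, and the `roomId` and `exp` claims are hypothetical, and a production system would normally rely on a vetted JWT library.

```typescript
import { createHmac, timingSafeEqual } from "node:crypto";

// Base64url-encode a JSON value (used for the JWT header and payload segments).
const encode = (value: object): string =>
  Buffer.from(JSON.stringify(value)).toString("base64url");

// HMAC-SHA256 signature over "header.payload", keyed by the API key.
const sign = (data: string, apiKey: string): string =>
  createHmac("sha256", apiKey).update(data).digest("base64url");

// Issue a token for a room; the `roomId` and `exp` claims are assumptions.
function issueToken(roomId: string, apiKey: string, ttlSeconds: number, now = Date.now()): string {
  const header = encode({ alg: "HS256", typ: "JWT" });
  const payload = encode({ roomId, exp: Math.floor(now / 1000) + ttlSeconds });
  return `${header}.${payload}.${sign(`${header}.${payload}`, apiKey)}`;
}

// Check signature and expiry; false when the token is malformed, tampered with, or expired.
function verifyToken(token: string, apiKey: string, now = Date.now()): boolean {
  const parts = token.split(".");
  if (parts.length !== 3) return false;
  const [header, payload, signature] = parts;
  const expected = Buffer.from(sign(`${header}.${payload}`, apiKey));
  const actual = Buffer.from(signature);
  // timingSafeEqual requires equal lengths, so compare lengths first.
  if (expected.length !== actual.length || !timingSafeEqual(expected, actual)) return false;
  const claims = JSON.parse(Buffer.from(payload, "base64url").toString("utf8"));
  return typeof claims.exp === "number" && claims.exp > Math.floor(now / 1000);
}
```

Under this scheme the browser only ever holds the signed token. The key stays in the database, where the authorizer Lambda reads it to recompute the signature.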

With this solution, accessing Otter’s infrastructure requires authorization, and the API key is not exposed to the end user.

CoTURN Session State & Sticky Session

Deploying CoTURN to the cloud presented its own set of challenges. Two noteworthy ones were:

- The need for a CoTURN instance to automatically detect its assigned public IP address.

- The need to track state across multiple CoTURN instances within the AWS ECS Cluster.

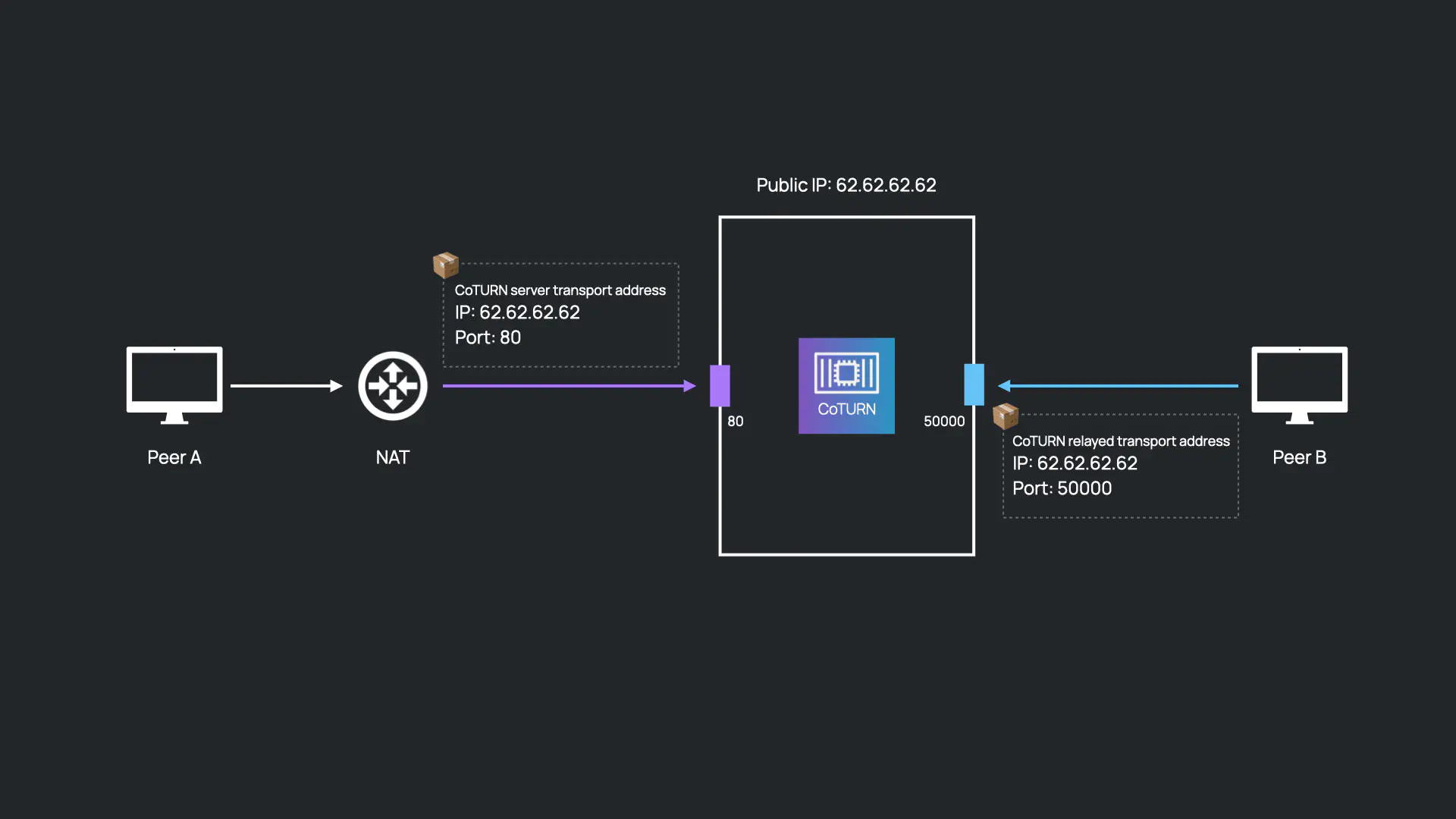

Imagine peer A and peer B want to establish a direct peer-to-peer connection, where a TURN server is required for peer A. Both peers need to send their packets to CoTURN which would then relay them accordingly, but how do the peers determine what IP address to use?

When a new instance of CoTURN is launched within the cluster, we execute a script that relies on the Domain Name System (DNS) protocol to figure out the public IP address assigned to the container. Well-established providers such as OpenDNS or Google offer special hostnames that, when resolved, will return the IP address of the caller. This could also have been done by sending an HTTP request to specific providers that offer the public IP address in their response body. However, HTTP has more overhead, and parsing the response is more time-consuming than using DNS.

Now that CoTURN is aware of its IP address, peer A asks CoTURN if it is able to relay packets to peer B. If successful, CoTURN allocates a session for peer A. Because CoTURN has to remember this session, it is stateful. The session consists primarily of the following: a public IP address, which is the IP address of CoTURN; a randomly chosen port that peer B uses to relay its packets to peer A; and a permission, which indicates that only packets from peer B should be relayed back to peer A and that all other packets should be discarded. The permission is primarily a security feature.
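The session described above can be modeled as a small data structure. This is a conceptual sketch only, not CoTURN's internal representation: the `Allocation` fields, the `allocate` and `shouldRelay` helpers, and the default port range are assumptions made for illustration.

```typescript
// Illustrative model of a TURN session; field names are assumptions.
interface Allocation {
  relayIp: string;             // CoTURN's public IP address
  relayPort: number;           // randomly chosen port that peer B sends to
  permittedPeers: Set<string>; // IP addresses allowed to reach peer A
}

// Pick a relay port in the given range and grant a permission for one peer.
function allocate(relayIp: string, peerIp: string, minPort = 49152, maxPort = 65535): Allocation {
  const relayPort = minPort + Math.floor(Math.random() * (maxPort - minPort + 1));
  return { relayIp, relayPort, permittedPeers: new Set([peerIp]) };
}

// Relay only packets whose source IP has a permission; discard everything else.
function shouldRelay(allocation: Allocation, sourceIp: string): boolean {
  return allocation.permittedPeers.has(sourceIp);
}
```

Since this structure lives in the memory of one CoTURN instance, every later packet for the session has to reach that same instance.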

Once the session is allocated, peer A can send CoTURN’s IP address and chosen port to peer B through the signaling channel as usual.

To make sure that CoTURN has access to the client’s state when handling requests, we opted for sticky sessions applied by the network load balancer. The network load balancer assigns each client a CoTURN instance from the cluster for the duration of the connection. This was a straightforward approach and did not require any changes to our application. However, if an instance fails, all associated client state is lost. Furthermore, sticky sessions can impede the ability of the network load balancer to distribute incoming traffic evenly, because client state is tied to individual instances.
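Conceptually, a sticky session is a deterministic mapping from a client to an instance. The sketch below illustrates the idea by hashing the client IP. It is not the load balancer's actual algorithm, and `hash` and `pickInstance` are hypothetical helpers.

```typescript
// Deterministic 32-bit string hash in the style of FNV-1a; chosen only for illustration.
function hash(value: string): number {
  let h = 0x811c9dc5;
  for (let i = 0; i < value.length; i++) {
    h ^= value.charCodeAt(i);
    h = Math.imul(h, 0x01000193) >>> 0; // keep the result an unsigned 32-bit integer
  }
  return h;
}

// Map a client IP to one instance so repeat requests land on the same CoTURN server.
function pickInstance(clientIp: string, instances: string[]): string {
  if (instances.length === 0) throw new Error("no CoTURN instances available");
  return instances[hash(clientIp) % instances.length];
}
```

The mapping depends only on the client, not on current load, which is why stickiness can leave some instances busier than others.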

Another option could have been to use a key-value store to hold all session allocation state. In this case, all CoTURN instances need access to this store to retrieve the client’s state on each request. The individual CoTURN instances become stateless, and there is no longer any need for the network load balancer to apply sticky sessions. However, the complexity of extracting the state from the instances and the increased latency of retrieving a particular client’s state from the store were significant enough to outweigh the benefits of this approach, especially compared to the simplicity of the sticky session approach.